Mobile app development can be a fascinating subject for uncovering the true potential of robust applications and what goes on behind closed doors. Today, users don't take apps at face value; they dive deep into their functionality, interface, and user experience. A lot goes into elevating the user experience. Through cutting-edge technology, businesses incorporate new features that attract audiences and offer novel experiences. Face detection is one of many exciting features that tend to enhance the user experience. In this blog, we'll focus on creating a robust face detection application using React Native, leveraging FaceNet for efficiency and accuracy.

This article sheds light on the nuanced challenges of integrating sophisticated machine learning functionality like FaceNet into the React Native framework. FaceNet's complexity and demanding computational requirements create obstacles, and the project addresses them through the strategic use of native bridging. By combining native Android app development and its capabilities with the adaptability of React Native, we can strike a balance between innovation and practicality.

Why FaceNet

FaceNet stands out in face-related tasks such as recognition, identity verification, and clustering similar faces. Its uniqueness lies in its approach to these tasks: rather than inventing new recognition or verification methods, FaceNet generates “embeddings”, numerical signatures that capture a face's characteristics. These embeddings are then used with established techniques, such as k-Nearest Neighbors for recognition or clustering algorithms for grouping. FaceNet's core strength is creating these embeddings with a deep convolutional neural network trained so that the distance between two embeddings reflects how similar the two faces are. The network is trained on a specific arrangement of images: triplets made of an anchor face, another image of the same person, and an image of a different person.
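As a minimal sketch of how embeddings pair with k-Nearest Neighbors, the following performs recognition as a 1-nearest-neighbour lookup (k = 1) over a gallery of enrolled embeddings. The `Enrolled` type, the function names, and the distance threshold are hypothetical illustrations, not FaceNet's or this app's code, and the toy vectors have 3 values instead of FaceNet's 128.

```typescript
// Hypothetical record pairing a person's name with a stored embedding.
interface Enrolled {
  name: string;
  embedding: number[];
}

// Euclidean (L2) distance between two embeddings of equal length.
function euclidean(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Embeddings must have the same length");
  }
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    sum += d * d;
  }
  return Math.sqrt(sum);
}

// 1-nearest-neighbour recognition: return the closest enrolled identity,
// or null when even the closest one is farther than maxDistance.
function recognize(
  query: number[],
  gallery: Enrolled[],
  maxDistance: number, // hypothetical threshold; tune it on your own data
): { name: string; distance: number } | null {
  let best: { name: string; distance: number } | null = null;
  for (const person of gallery) {
    const distance = euclidean(query, person.embedding);
    if (best === null || distance < best.distance) {
      best = { name: person.name, distance };
    }
  }
  return best !== null && best.distance <= maxDistance ? best : null;
}

// Toy 3-dimensional vectors for illustration only.
const gallery: Enrolled[] = [
  { name: "alice", embedding: [0.1, 0.9, 0.2] },
  { name: "bob", embedding: [0.8, 0.1, 0.5] },
];
console.log(recognize([0.12, 0.88, 0.21], gallery, 0.5));
```

Returning null above the threshold lets the app treat an unknown face differently from a weak match.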

The Need for Bridging

Developers use React Native for cross-platform app development, but the platform has its limitations and challenges. It struggles with computationally or hardware-intensive tasks like real-time face detection. FaceNet requires more processing power and deeper hardware access than a standard React Native setup provides.

The purpose of bridging is to address this issue by allowing code to be written in native languages (such as Swift or Objective-C for iOS, and Kotlin or Java for Android) and integrated with the React Native codebase. This approach makes full use of FaceNet's capabilities on iOS and Android within a React Native application.

Overview of the Application

The application demonstrates the effective integration of FaceNet's robust face detection into a cross-platform React Native app. It shows how FaceNet can be used in native Android and iOS environments and bridged to React Native. This integration provides a responsive user experience.

The app combines FaceNet's accurate and fast face detection with React Native's user-friendly interface and cross-platform efficiency.

Technical Implementation


React Native

Initiating Face Detection

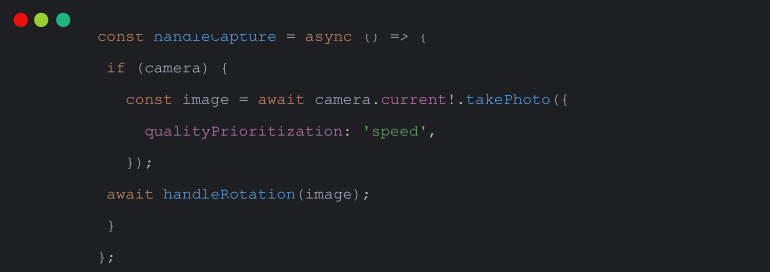

Face detection in our app begins when a user taps ‘Scan Face’. This triggers operations that combine React Native functionality with native modules.

React Native Vision Camera

‘Scan Face’ activates React Native Vision Camera, a library for efficient real-time photo and video capture in React Native apps. It is essential for obtaining the high-quality images that accurate face detection depends on.

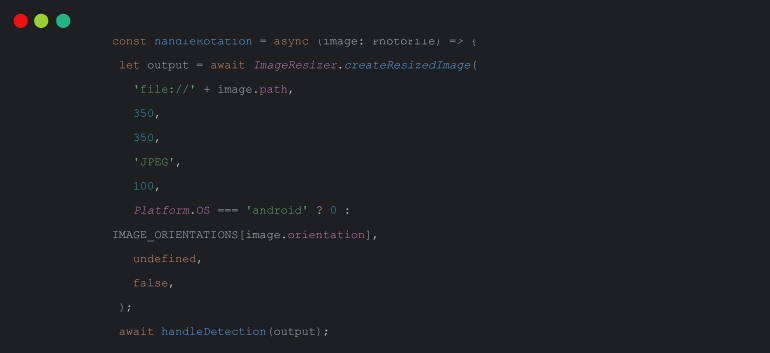

Image Processing: Resizing and Orientation

After capture, the app processes the image for optimal detection, which includes resizing it and adjusting its orientation. The handleRotation function plays a key role in this process.

Here, ImageResizer.createResizedImage is used to resize the image to the required dimensions and to correct its orientation, taking into account the differences in how iOS and Android handle images.
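The arithmetic behind this step can be sketched as a pure function. This is a hypothetical helper, not the article's handleRotation: it assumes the capture's EXIF orientation tag is available and maps it to a rotation angle and an upright output size that could then be passed to the resizer. Only the four non-mirrored orientations are handled, and the direction convention of the resizer's rotation argument is worth confirming in its documentation.

```typescript
// Size of an image in pixels.
interface Size {
  width: number;
  height: number;
}

// Clockwise rotation (degrees) that displays each non-mirrored EXIF
// orientation upright. Mirrored values (2, 4, 5, 7) are not handled here.
const EXIF_TO_ROTATION: Record<number, number> = { 1: 0, 3: 180, 6: 90, 8: 270 };

// Hypothetical helper: choose the rotation to apply and the output size after
// rotating and scaling down so the longer side is at most maxSide.
function planResize(
  size: Size,
  exifOrientation: number,
  maxSide: number,
): { rotation: number; output: Size } {
  const rotation = EXIF_TO_ROTATION[exifOrientation] ?? 0;
  // A quarter turn swaps width and height.
  const quarterTurn = rotation === 90 || rotation === 270;
  const upright: Size = quarterTurn
    ? { width: size.height, height: size.width }
    : size;
  // Never upscale, and keep the aspect ratio.
  const scale = Math.min(1, maxSide / Math.max(upright.width, upright.height));
  return {
    rotation,
    output: {
      width: Math.round(upright.width * scale),
      height: Math.round(upright.height * scale),
    },
  };
}

// A 4000x3000 capture tagged with orientation 6 becomes a 750x1000 portrait.
console.log(planResize({ width: 4000, height: 3000 }, 6, 1000));
```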

Detecting Faces in the Image

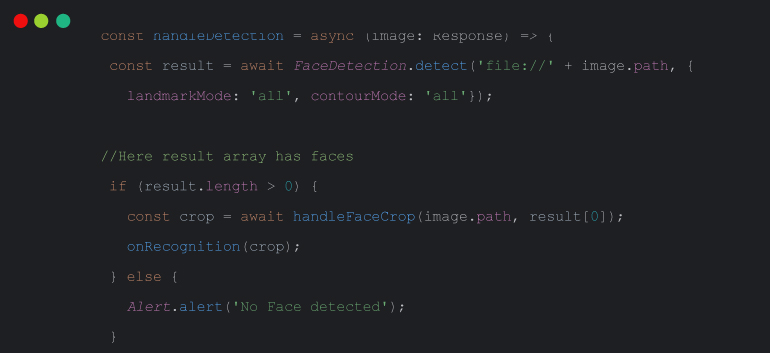

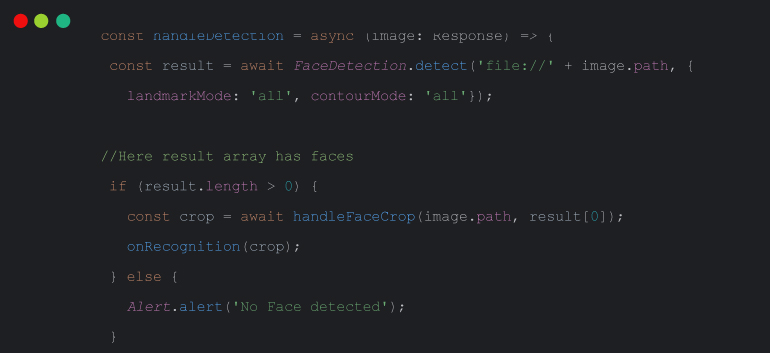

With the image processed, the next essential step is to detect faces within it. The handleDetection function is central to this process.

Here, the FaceDetection.detect function from the @react-native-ml-kit/face-detection library identifies faces in the image, capturing all relevant landmarks and contours. If a face is detected, the app crops the image around it and proceeds with recognition. Otherwise, it alerts the user that no face was detected.
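When the detector returns more than one face, the app must choose which one to crop. The source does not say how handleDetection chooses, so the following is a hypothetical policy (keep the largest face) together with the crop-or-notify branch described above. `DetectedFace` is a minimal stand-in for the library's result type; its `frame` field names are an assumption to verify against the library's type definitions.

```typescript
// Assumed minimal shape of one detection result: only the bounding box is used.
interface FaceFrame {
  left: number;
  top: number;
  width: number;
  height: number;
}
interface DetectedFace {
  frame: FaceFrame;
}

// Outcome of the detection step: crop a face, or tell the user none was found.
type DetectionOutcome =
  | { kind: "crop"; face: DetectedFace }
  | { kind: "noFace" };

// Hypothetical policy: keep the largest face, usually the one closest to the camera.
function pickLargestFace(faces: DetectedFace[]): DetectedFace | null {
  let best: DetectedFace | null = null;
  for (const face of faces) {
    const area = face.frame.width * face.frame.height;
    if (best === null || area > best.frame.width * best.frame.height) {
      best = face;
    }
  }
  return best;
}

// Branch described in the text: crop when a face exists, otherwise notify.
function decideNextStep(faces: DetectedFace[]): DetectionOutcome {
  const face = pickLargestFace(faces);
  return face === null ? { kind: "noFace" } : { kind: "crop", face };
}
```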

Crop Image

After a face is detected in the image, the handleFaceCrop function is called. This function calculates the exact region of the detected face in the original image and uses the react-native-photo-manipulator library to crop the face.

The resulting cropped image is then used in the subsequent steps.
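The region calculation can be sketched as a pure function. Beyond what the source shows, it assumes detection ran on the resized image, so the box must be scaled back to the original image's dimensions; the padding fraction is a hypothetical choice. The returned `{ x, y, width, height }` rectangle is meant to serve as the crop region, but the exact field names expected by react-native-photo-manipulator should be checked in its documentation.

```typescript
interface Box { left: number; top: number; width: number; height: number; }
interface Size { width: number; height: number; }
interface Rect { x: number; y: number; width: number; height: number; }

// Hypothetical helper: map a face box found on the resized image back to the
// original image, enlarge it by `padding` (a fraction of the box size) on each
// side, and clamp it so the crop never extends past the image edges.
function faceCropRect(box: Box, detected: Size, original: Size, padding = 0.1): Rect {
  const sx = original.width / detected.width;
  const sy = original.height / detected.height;
  const padX = box.width * padding;
  const padY = box.height * padding;
  const x0 = Math.max(0, (box.left - padX) * sx);
  const y0 = Math.max(0, (box.top - padY) * sy);
  const x1 = Math.min(original.width, (box.left + box.width + padX) * sx);
  const y1 = Math.min(original.height, (box.top + box.height + padY) * sy);
  return {
    x: Math.round(x0),
    y: Math.round(y0),
    width: Math.round(x1 - x0),
    height: Math.round(y1 - y0),
  };
}

// A 200x200 box on a 1000x1000 working copy of a 2000x2000 photo:
// prints { x: 160, y: 160, width: 480, height: 480 }
console.log(
  faceCropRect(
    { left: 100, top: 100, width: 200, height: 200 },
    { width: 1000, height: 1000 },
    { width: 2000, height: 2000 },
  ),
);
```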

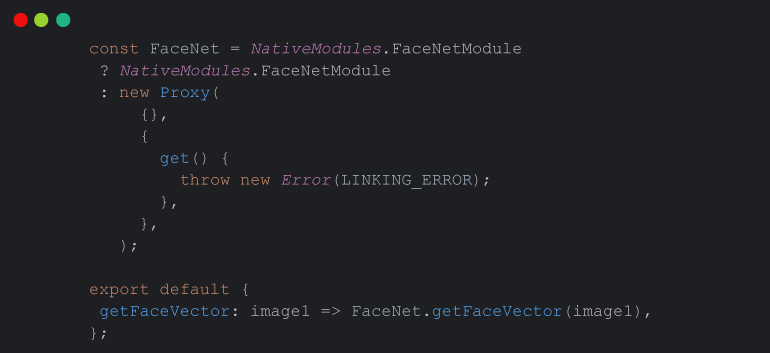

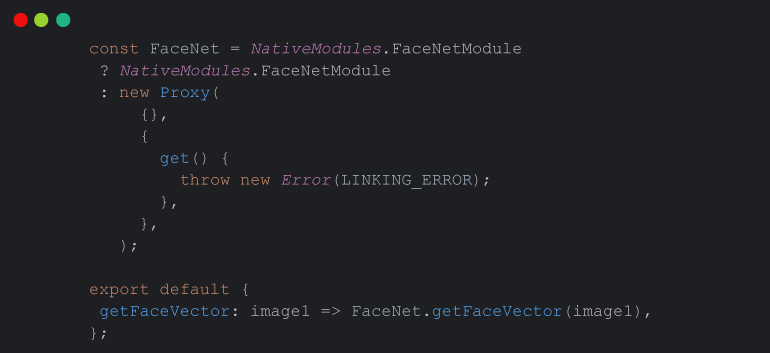

The FaceNet module interfaces with the native FaceNet implementation to retrieve face vectors. This cross-platform approach lets the app work seamlessly on both Android and iOS devices.
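On the JavaScript side, a thin typed wrapper can make the native contract explicit. This is a sketch under assumptions: the names FaceNetModule and getFaceVector come from this article, but the argument (an image path) and the payload type are not shown in the source, so the wrapper leaves the result as `unknown`. Passing the module in as a parameter, rather than importing it inside the function, also lets the code run in plain tests.

```typescript
// Assumed JavaScript-side view of the native module. The method name comes from
// the article; the argument (image path) and the payload type are assumptions.
interface FaceNetNativeModule {
  getFaceVector(imagePath: string): Promise<unknown>;
}

// Hypothetical wrapper. In the app, the first argument would be
// NativeModules.FaceNetModule from "react-native", which would be undefined
// if the native registration were missing; this check reports that clearly.
async function fetchFaceVector(
  nativeModule: FaceNetNativeModule | undefined,
  imagePath: string,
): Promise<unknown> {
  if (nativeModule === undefined || typeof nativeModule.getFaceVector !== "function") {
    throw new Error("FaceNetModule is not available; check the native module registration");
  }
  return nativeModule.getFaceVector(imagePath);
}

// Usage with a stub standing in for the native side.
const stubModule: FaceNetNativeModule = {
  getFaceVector: async (path: string) => `payload for ${path}`,
};
fetchFaceVector(stubModule, "face.jpg").then((payload) => console.log(payload));
```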

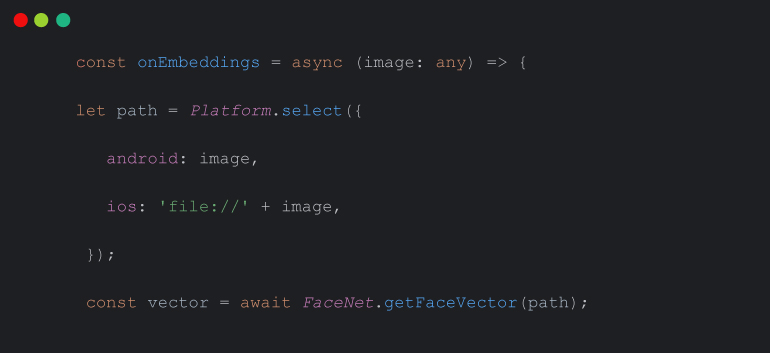

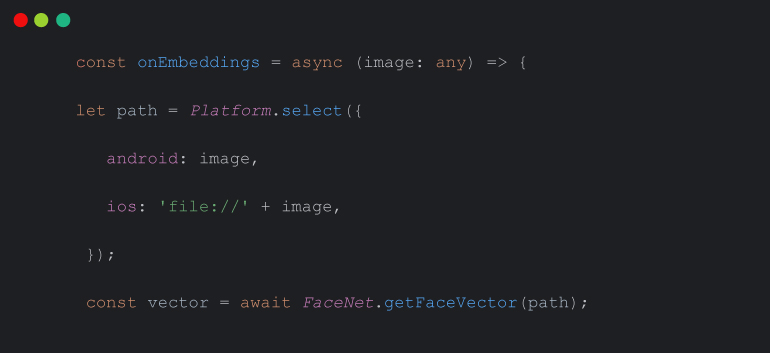

In the onEmbeddings function, the face vector is obtained from the cropped face image, and platform-specific handling is applied where necessary. Once the face vector is available, it is ready for further processing.

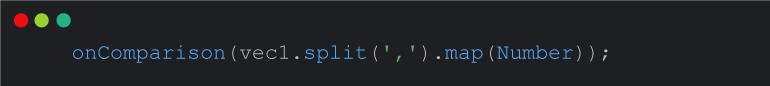

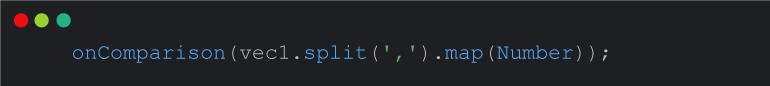

After getting the face vector, we receive a JSON object that contains the vector as a JSON array. We split the array, convert it into a numeric array, and pass the numeric list to the onComparison function, which compares the extracted vector with the stored one.
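The parsing step can be sketched as follows. The payload shape is an assumption: a JSON string whose hypothetical `data` field holds the vector either as a JSON array or as that array serialized to a string (which is what "splitting" the array suggests). The default length of 128 matches the FaceNet embedding size described later in this article.

```typescript
// Assumption: the native side resolves with JSON such as
// {"data": [0.12, -0.03, ...]} or {"data": "[0.12, -0.03, ...]"}.
// The key name "data" is hypothetical.
function parseFaceVector(raw: string, expectedLength = 128): number[] {
  const parsed: unknown = JSON.parse(raw);
  if (typeof parsed !== "object" || parsed === null || !("data" in parsed)) {
    throw new Error("Response has no data field");
  }
  const data = (parsed as { data: unknown }).data;
  // Accept a real JSON array, or the array serialized as a string:
  // strip the surrounding brackets and split on commas.
  const items: unknown[] = Array.isArray(data)
    ? data
    : String(data).replace(/^\s*\[|\]\s*$/g, "").split(",");
  const vector = items.map((v) => Number(v));
  // Reject vectors of the wrong size or with non-numeric entries.
  if (vector.length !== expectedLength || vector.some((v) => !Number.isFinite(v))) {
    throw new Error(`Expected ${expectedLength} finite numbers, got ${vector.length}`);
  }
  return vector;
}
```

Validating the length and the numeric values here keeps malformed payloads from silently producing misleading comparison scores later.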

Compare Vectors

In the application, comparing stored vectors with extracted vectors is a crucial phase.

Here's a step-by-step breakdown of the comparison process:

Comparison Functions

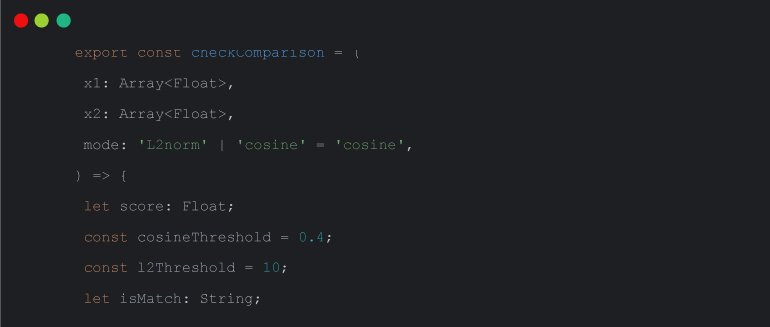

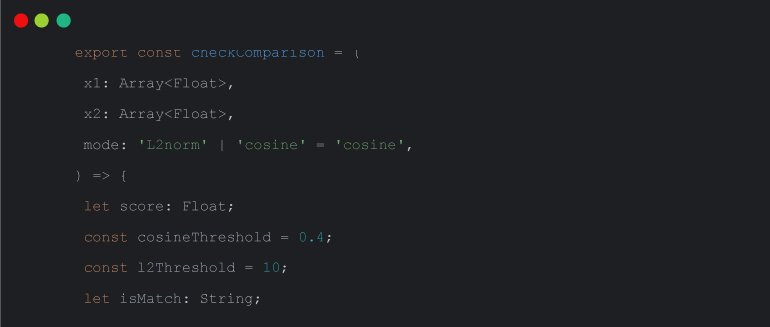

checkComparison

- Inputs: Takes two face vectors (x1 and x2) and a mode (‘L2norm’ or ‘cosine’) for comparison.

- Initialization: Sets up variables for the score, the thresholds for cosine similarity and L2 norm, and a match indicator.

- Calculation:

  - Cosine Similarity: If the mode is ‘cosine’, it calculates the similarity score; a higher score implies a better match. It compares the score with the cosine threshold to determine whether there is a match.

  - L2 Norm: If the mode is ‘L2norm’, it calculates the L2 norm score; a lower score indicates a better match. It compares the score with the L2 threshold to decide on a match.

- Output: Returns an object with the rounded score and a boolean indicating whether the vectors match.
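The steps above can be sketched in TypeScript. The threshold values are hypothetical placeholders (the article does not give its values), the four-decimal rounding is an assumed precision, and the L2 branch returns the plain Euclidean distance between the vectors.

```typescript
type Mode = "L2norm" | "cosine";

// Hypothetical thresholds: suitable values depend on the model and should be
// tuned on labelled pairs of same-person and different-person faces.
const COSINE_THRESHOLD = 0.8;
const L2_THRESHOLD = 1.0;

function checkComparison(
  x1: number[],
  x2: number[],
  mode: Mode,
): { score: number; match: boolean } {
  if (x1.length !== x2.length) throw new Error("Vectors must have the same length");
  // One pass accumulates the dot product, both squared norms,
  // and the sum of squared differences.
  let dot = 0, n1 = 0, n2 = 0, sq = 0;
  for (let i = 0; i < x1.length; i++) {
    dot += x1[i] * x2[i];
    n1 += x1[i] * x1[i];
    n2 += x2[i] * x2[i];
    const d = x1[i] - x2[i];
    sq += d * d;
  }
  let score: number;
  let match: boolean;
  if (mode === "cosine") {
    // Higher is better; a zero vector yields 0 to avoid division by zero.
    score = n1 === 0 || n2 === 0 ? 0 : dot / (Math.sqrt(n1) * Math.sqrt(n2));
    match = score > COSINE_THRESHOLD;
  } else {
    // Lower is better; identical vectors give 0.
    score = Math.sqrt(sq);
    match = score < L2_THRESHOLD;
  }
  return { score: Math.round(score * 10000) / 10000, match };
}
```

Computing both metrics in one loop is only a convenience of this sketch; separate helpers such as the article's cosineSimilarity and l2NormPercentage work equally well.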

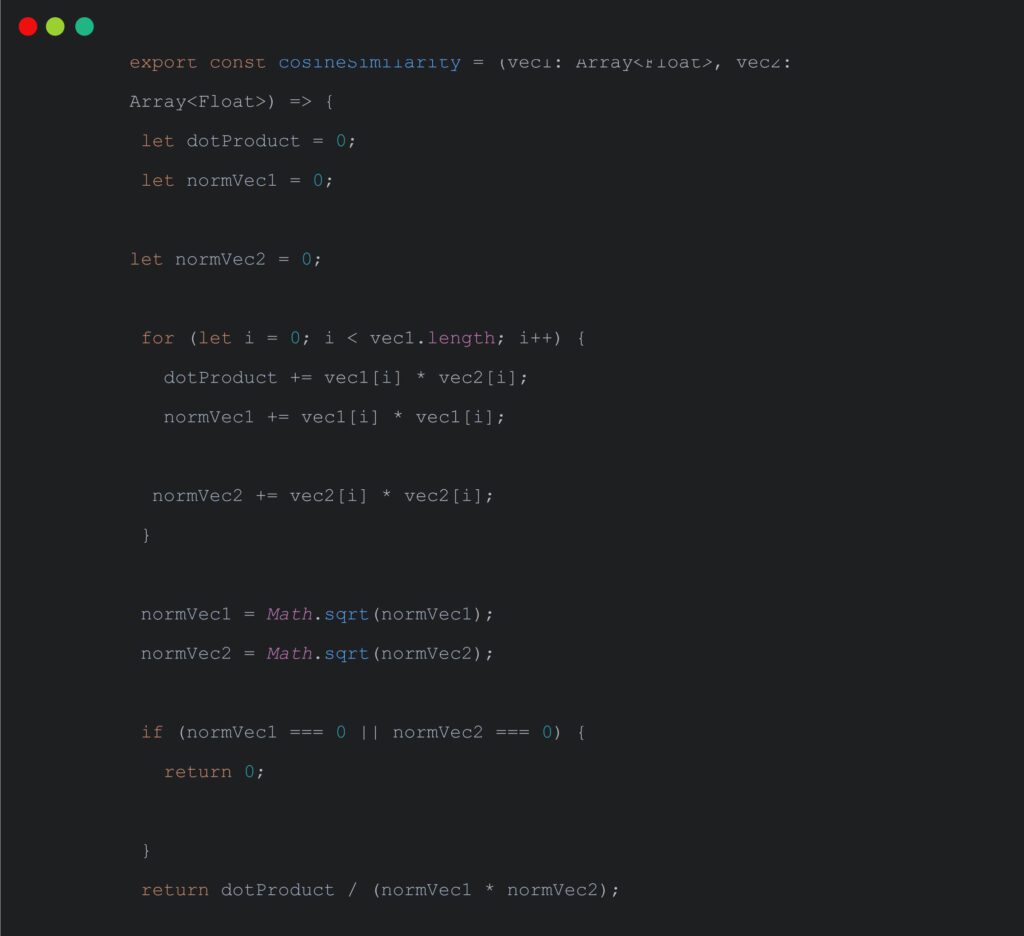

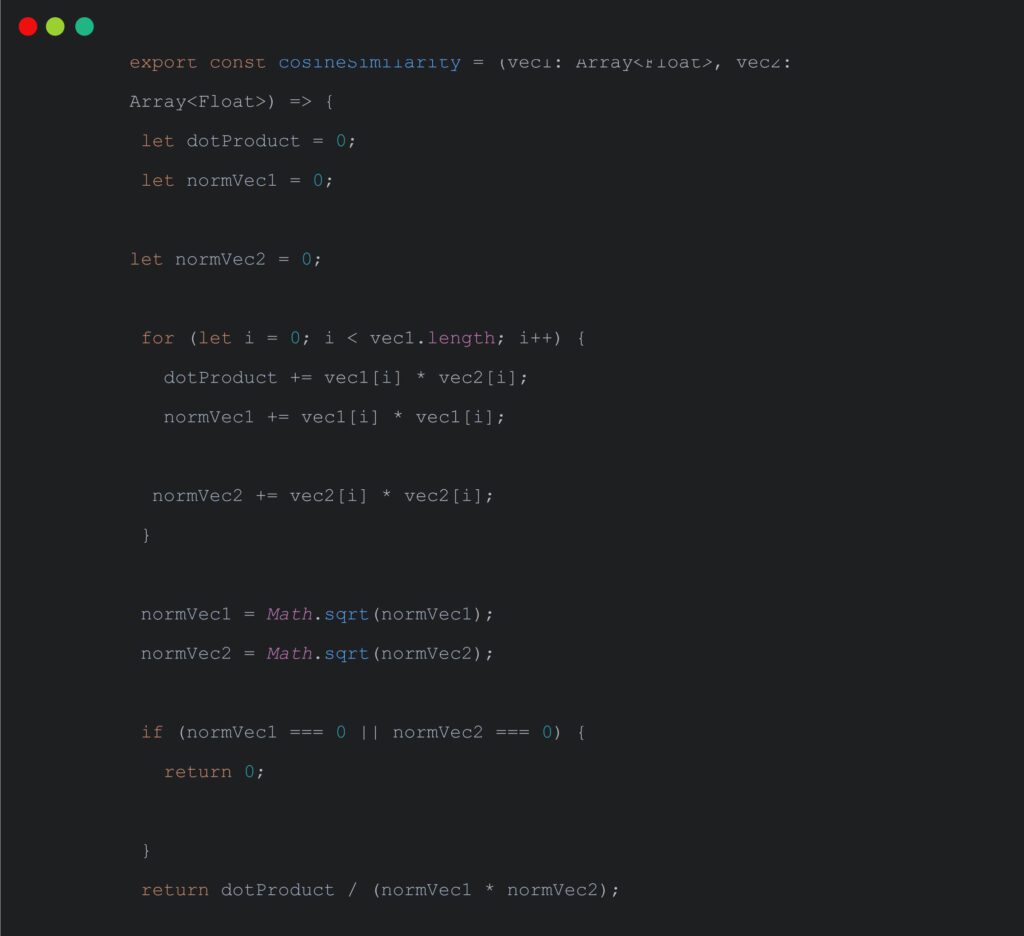

cosineSimilarity

- This function calculates the cosine similarity between two vectors, vec1 and vec2.

- It computes the dot product of the vectors and the norm of each vector.

- If either vector norm is zero, it returns 0 to avoid division by zero.

- Otherwise, it returns the cosine similarity score.
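Written out, with $\mathbf{v}_1$ and $\mathbf{v}_2$ standing for vec1 and vec2, the score is the dot product divided by the product of the norms; the small vectors in the worked example are made up for illustration.

$$
\cos(\mathbf{v}_1, \mathbf{v}_2) = \frac{\mathbf{v}_1 \cdot \mathbf{v}_2}{\lVert \mathbf{v}_1 \rVert_2 \, \lVert \mathbf{v}_2 \rVert_2} = \frac{\sum_{i} v_{1,i}\, v_{2,i}}{\sqrt{\sum_{i} v_{1,i}^2}\,\sqrt{\sum_{i} v_{2,i}^2}}
$$

$$
\mathbf{v}_1 = (1, 2, 2),\quad \mathbf{v}_2 = (2, 1, 2):\qquad \cos(\mathbf{v}_1, \mathbf{v}_2) = \frac{1\cdot 2 + 2\cdot 1 + 2\cdot 2}{\sqrt{9}\,\sqrt{9}} = \frac{8}{9} \approx 0.889
$$

The score ranges from $-1$ to $1$, and $1$ means the vectors point in exactly the same direction.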

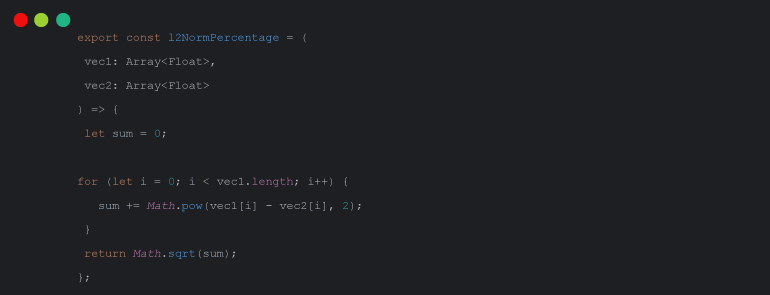

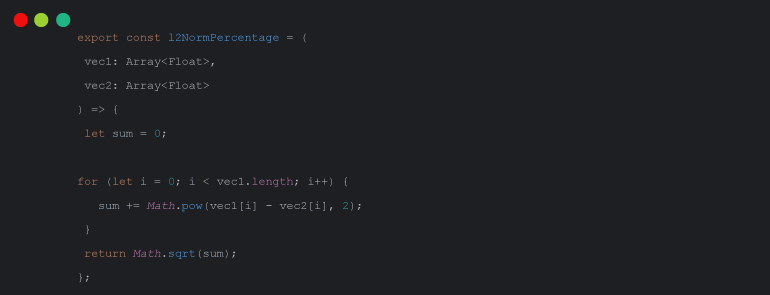

l2NormPercentage

- This function calculates the L2 norm between two vectors, vec1 and vec2.

- It computes the sum of squared differences between corresponding elements of the vectors.

- The result is the square root of that sum, i.e. the L2 (Euclidean) norm of the difference between the two vectors.

Together, these functions support both cosine-similarity and L2-norm comparisons between face vectors, which provides flexibility in determining whether two vectors represent the same person.
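The two metrics are closely related. If both embeddings have unit length (the FaceNet paper constrains its embeddings to the unit hypersphere; whether the TensorFlow Lite model used here outputs normalized vectors is not stated, so treat this as an assumption), expanding the squared distance gives:

$$
\lVert \mathbf{v}_1 - \mathbf{v}_2 \rVert_2^2 = \lVert \mathbf{v}_1 \rVert_2^2 + \lVert \mathbf{v}_2 \rVert_2^2 - 2\, \mathbf{v}_1 \cdot \mathbf{v}_2 = 2 - 2\cos(\mathbf{v}_1, \mathbf{v}_2)
$$

Under that assumption, an L2 threshold $t$ is equivalent to a cosine threshold of $1 - t^2/2$; for example, $t = 1$ corresponds to a cosine similarity of $0.5$.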


Android Native

In this scenario, we implement a FaceNet model in native Android code to extract the face vector. We call getFaceVector from React Native, and bridging routes that call to the native Android method.

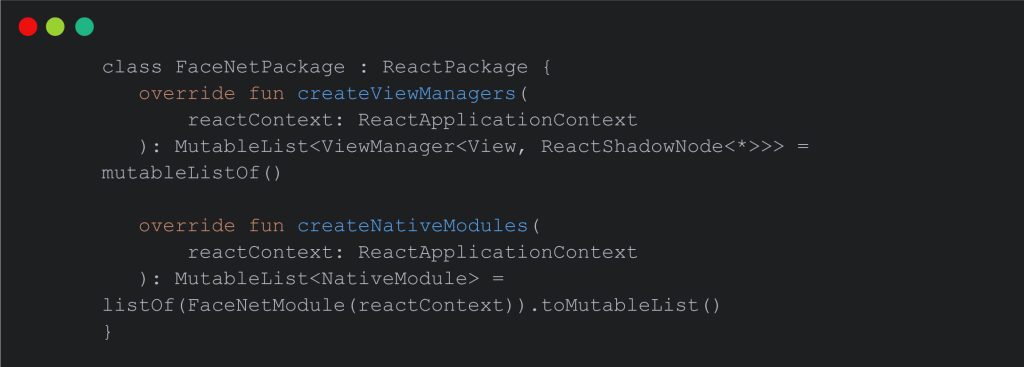

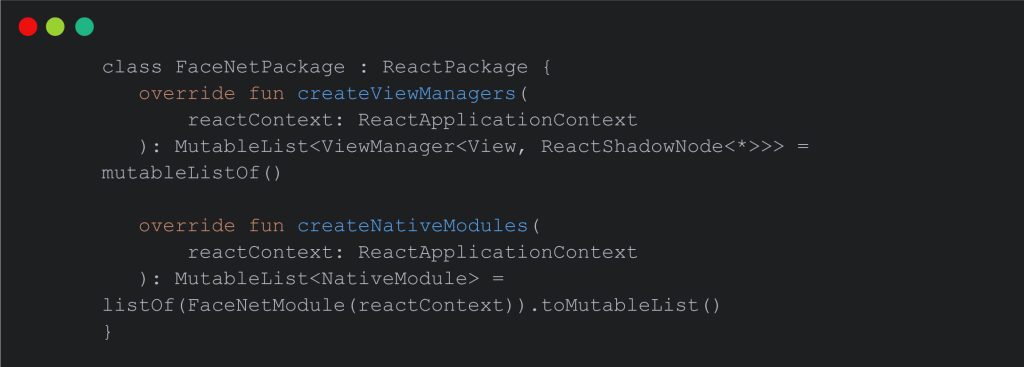

Here is the implementation of bridging in native Android.

It acts as a bridge between our Android native code and our React Native JavaScript code, allowing us to register and expose native modules (such as FaceNetModule) for use in our React Native application.

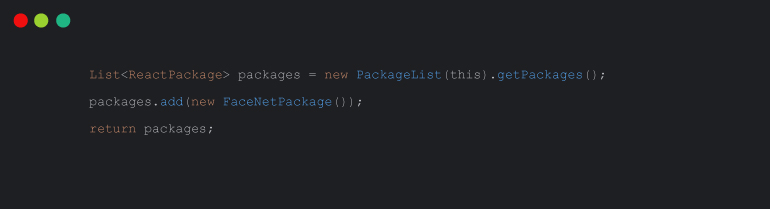

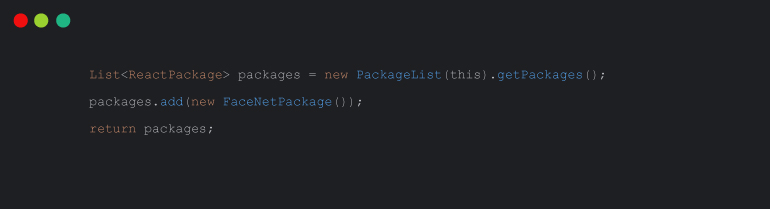

We then register this class in MainApplication.java.


Fetch Face Vector in Android

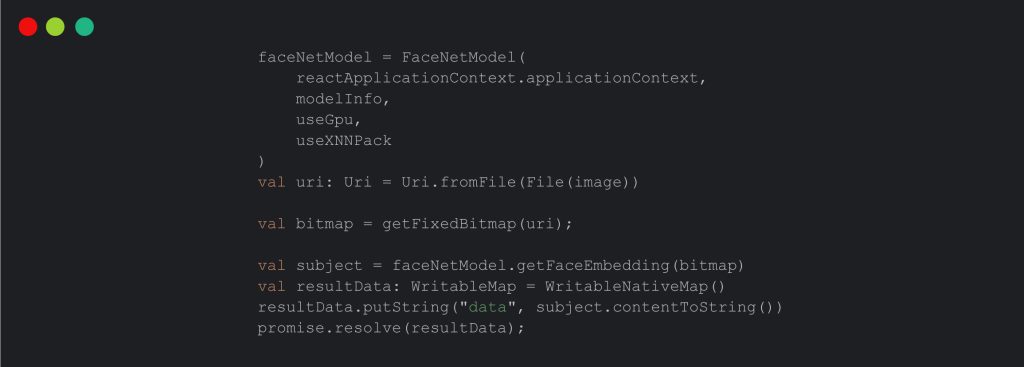

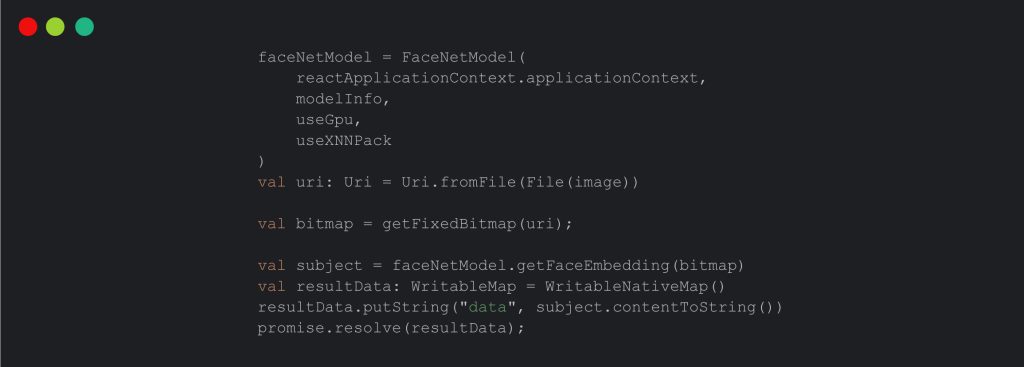

We initialize the FaceNetModel, which is responsible for face recognition. The initialization sets the model info and whether to use the GPU and XNNPack, so that the face recognition model is ready for use.

This is the implementation of our code:

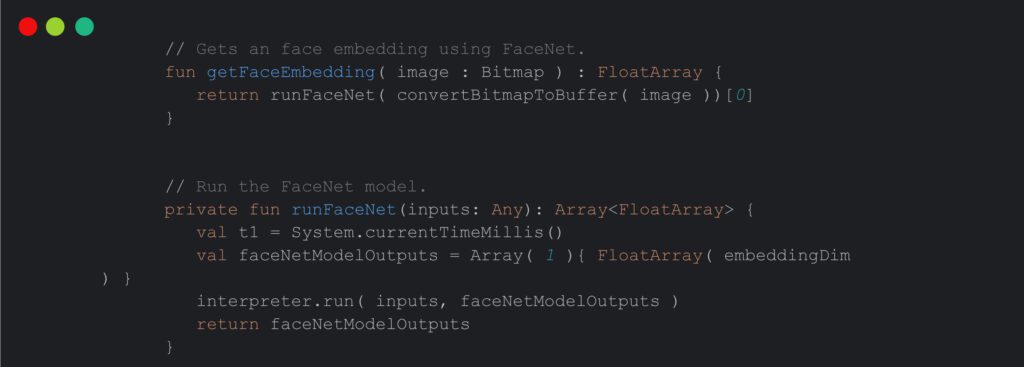

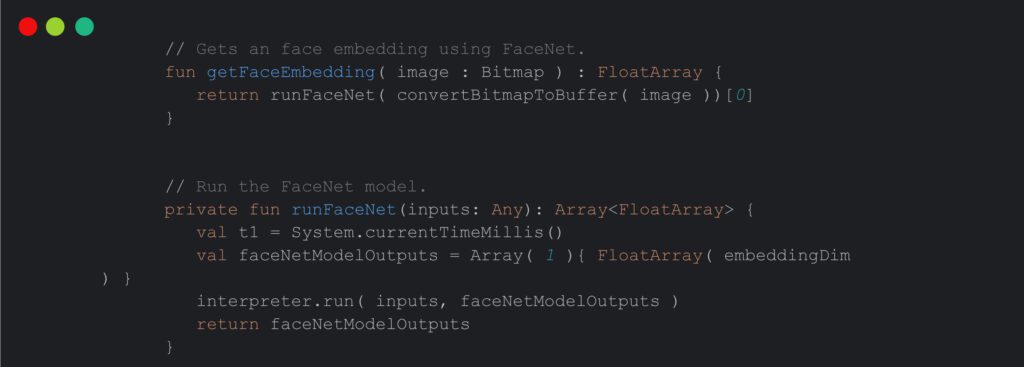

FaceNetModel contains all the configuration and processing methods. We are using a TensorFlow Lite FaceNet model. In simple terms, FaceNet is a deep convolutional neural network that extracts features from an image of a person's face. It was introduced in 2015 by Google researchers Schroff et al.

FaceNet takes an image of a face as input and outputs a vector of 128 numbers. These numbers represent the most important, distinguishing features of the face. In machine learning terminology, this vector is called an embedding.
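The source does not show how the Android code prepares the cropped face before inference. Many FaceNet ports standardize pixel values first, following the "prewhiten" step of the widely used davidsandberg/facenet code: subtract the mean of all pixel values and divide by their standard deviation, floored at $1/\sqrt{n}$. Whether this app's TensorFlow Lite model expects this normalization, or a fixed scaling such as dividing by 255, is an assumption to check against the model you ship. It is sketched in TypeScript here to keep all examples in one language.

```typescript
// Standardize raw pixel values (for example interleaved R, G, B in 0-255) to
// zero mean and unit variance. The standard deviation is floored at 1/sqrt(n)
// so a perfectly flat image does not cause a division by zero.
function prewhiten(pixels: ArrayLike<number>): Float32Array {
  const n = pixels.length;
  if (n === 0) throw new Error("Empty image");
  let sum = 0;
  for (let i = 0; i < n; i++) sum += pixels[i];
  const mean = sum / n;
  let sq = 0;
  for (let i = 0; i < n; i++) sq += (pixels[i] - mean) ** 2;
  // Population standard deviation over all values, as numpy's std computes by default.
  const std = Math.sqrt(sq / n);
  const stdAdj = Math.max(std, 1 / Math.sqrt(n));
  const out = new Float32Array(n);
  for (let i = 0; i < n; i++) out[i] = (pixels[i] - mean) / stdAdj;
  return out;
}
```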

Here is the implementation of fetching face vectors.


iOS Native

Next, we implement a FaceNet model in native iOS code to extract the face vector. As on Android, we call getFaceVector from React Native, and bridging routes that call to the native iOS method.

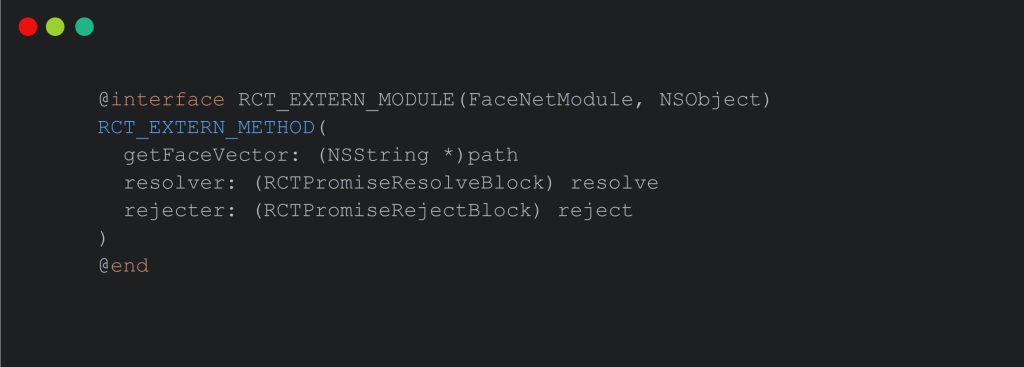

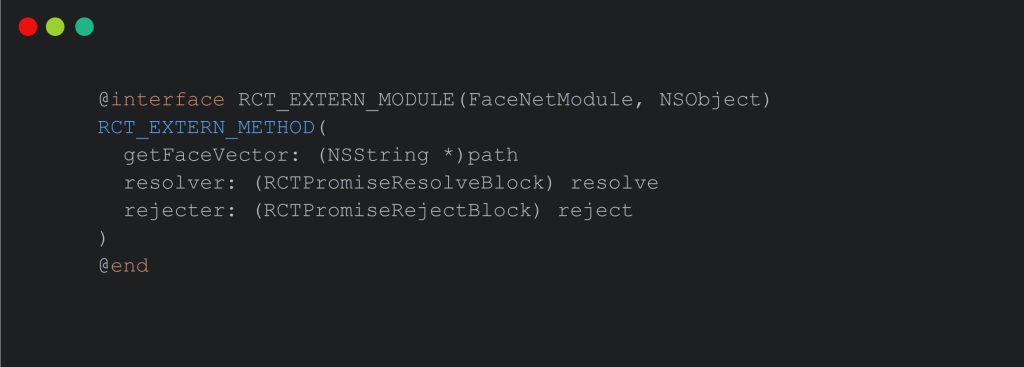

Here is the implementation of bridging in native iOS.

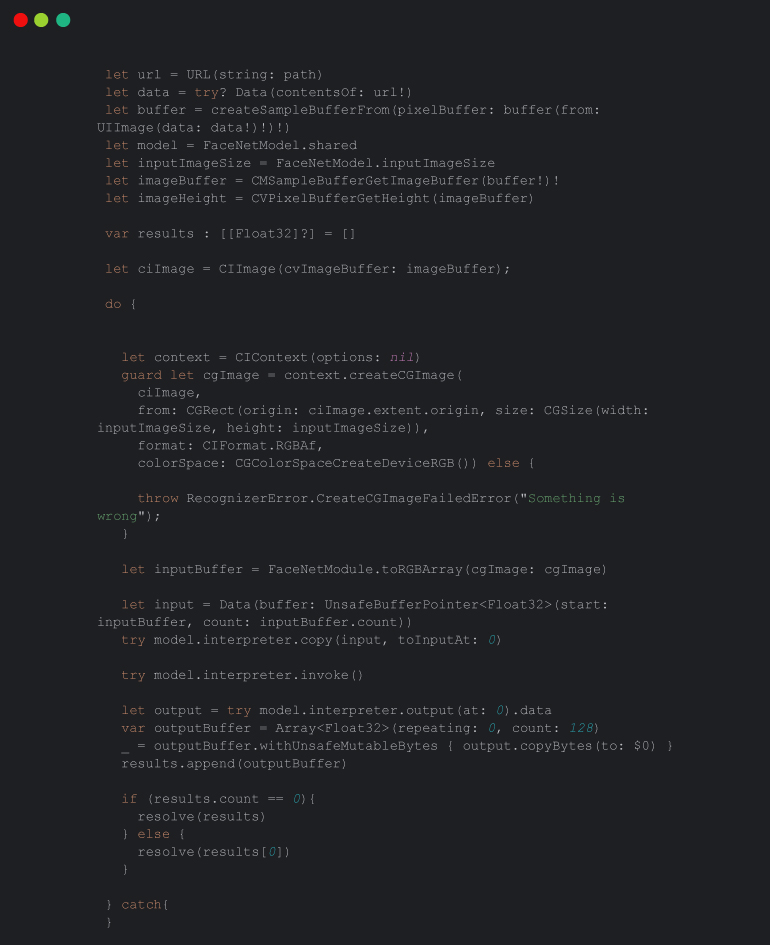

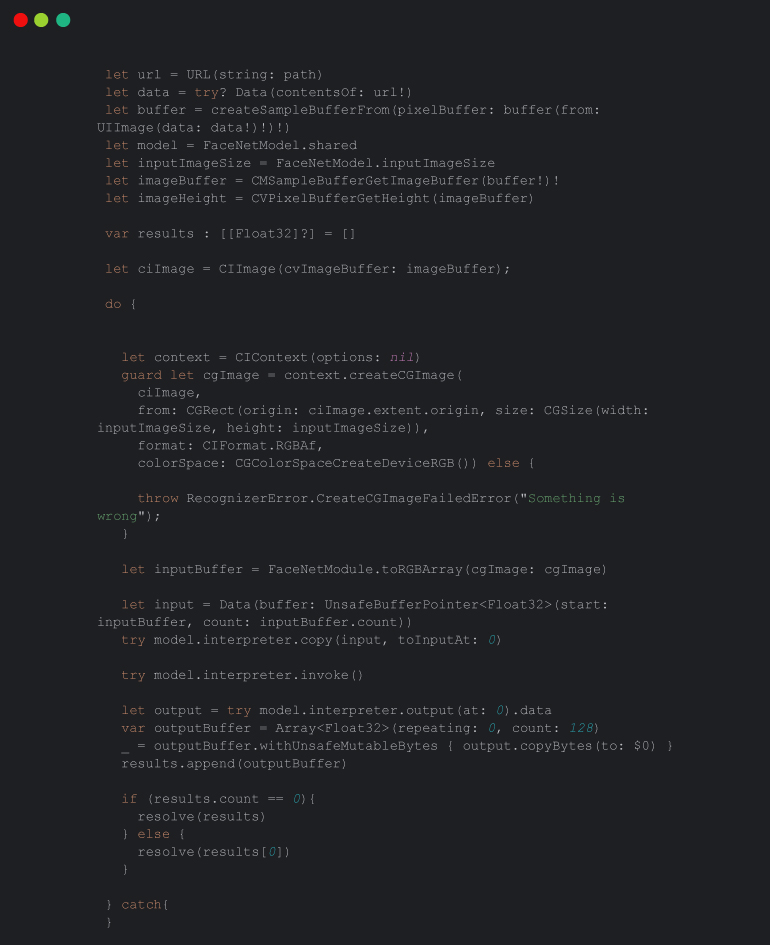

Fetch Face Vector in iOS

In iOS, we created the “FaceNetModule” in Objective-C and exposed the “getFaceVector” method to process images for face recognition.

This is the implementation of getFaceVector in iOS.

Why Embeddings

Embeddings are fundamental to the process because they condense the vital information in an image into a compact vector. FaceNet, in particular, compresses a face into a 128-number vector. The idea is that embeddings of similar faces will be close to each other. Transforming high-dimensional data (like images) into low-dimensional representations (embeddings) is common in machine learning. Because embeddings are vectors, they can be interpreted as points in a Cartesian coordinate system, which lets us plot a face image in this system using its embedding.
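Because embeddings are points in a vector space, putting them on a common scale before comparing them is often useful. The FaceNet paper constrains its embeddings to unit length; the following is a minimal sketch of that normalization as an optional step that this article does not describe, with a hypothetical function name.

```typescript
// Scale an embedding to unit L2 length so it lies on the unit hypersphere.
// A zero vector cannot be normalized and is returned as an unchanged copy.
function l2Normalize(v: number[]): number[] {
  const norm = Math.sqrt(v.reduce((acc, x) => acc + x * x, 0));
  return norm === 0 ? v.slice() : v.map((x) => x / norm);
}

console.log(l2Normalize([3, 4])); // [ 0.6, 0.8 ]
```

Applying the same normalization to stored and freshly extracted embeddings keeps their distances comparable; for unit vectors the Euclidean distance always lies between 0 and 2.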

Results and Performance

Our application demonstrates high accuracy in face detection, a testament to FaceNet's robust algorithm. Accuracy is crucial in many applications, and achieving it is a noteworthy accomplishment. The application is also fast and efficient at face detection, which makes it practical for real-world applications where immediate responses are essential.

Challenges and Learnings

Integrating FaceNet into React Native posed unique challenges, particularly in bridging native modules and ensuring smooth interaction between JavaScript and native code. The process provided deep insight into how React Native works and into the intricacies of integrating advanced machine learning models like FaceNet. Overcoming these challenges required a thorough understanding of both React Native and FaceNet and made for a significant learning experience.

Conclusion and Future Directions

In the end, the successful integration of FaceNet for face detection shows how React Native's capacity for building sophisticated applications paves the way for future possibilities. These include:

- Real-time Face Recognition: Building on the established face detection foundation, you could venture into real-time face recognition. This opens doors to applications such as device unlocking or a personalized UX.

- Augmented Reality (AR) Features: Face detection and recognition are key components of many AR applications. You could explore adding AR features to your application that let users interact with virtual objects based on their facial expressions and movements.

- Improved User Experience: FaceNet could further enhance the user experience by enabling personalized content, recommendations, or security features, such as tailoring content based on faces recognized in photos or videos.

If you are excited by this technology and want to learn more, contact Xavor to explore its services and expertise.

FAQs

Q: What are the challenges of face detection and recognition?

A: Challenges include handling varying lighting conditions and occlusions, and ensuring accuracy across different facial expressions.

Q: How do I use FaceNet for face recognition?

A: Use FaceNet to extract facial features as embeddings in a high-dimensional space, then compare those embeddings to match faces accurately.

Q: How do you implement face recognition in React Native?

A: Implement face recognition in React Native by integrating libraries such as react-native-camera and face-api.js for efficient development.

Q: What are the main processing steps used to implement a face recognition system?

A: The main processing steps are face detection, feature extraction, and matching against a database for accurate and reliable face recognition.