Author: Scott Rouse, Features Writer

Over 20 years ago, I sat in a lecture hall while a professor talked excitedly about artificial neural networks (ANNs) and their potential to transform computing as we knew it. If we think of a neuron as a basic processing unit, then creating an artificial network of them would be akin to multiplying processing power by several factors and changing the traditional view of every computer having one central processing unit, or CPU.

I found the idea of modelling the neurons of the human brain compelling, but frustratingly inaccessible. For one thing: how? How exactly do we translate the firing of neurons, a complex electro-chemical event that isn’t yet fully understood, and apply it to computing? That is before we even get into neural communication, the coordinated activity of neural networks and the even more complex questions surrounding consciousness. How exactly do we get computers to ‘think’ in the same way that we do? Back then, I had a laptop that was an inch thick and capable of a relatively limited number of tasks. It seemed almost unfathomable to think that these ideas could be put into practice alongside the current incarnation of silicon-based chips.

It would take 10 years before a research paper from Google changed everything. In 2017, a team of researchers at Google published a paper with an unassuming title: Attention Is All You Need. Few could have predicted that this work would mark the beginning of a new epoch in artificial intelligence. The paper introduced the ‘transformer’ architecture – a design that allowed machines to learn patterns in language with unprecedented efficiency and scale. Within a few years, this idea would evolve into large language models (LLMs), the kind of which was popularised by OpenAI’s ChatGPT. It was the foundation of systems capable of reasoning, translating, coding and conversing with near-human fluency. But this was not the first step.

A year earlier, Google’s Exploring the Limits of Language Modeling had shown that scaling artificial neural networks – in data, parameters and computation – yielded a predictable, steady rise in performance. Together, these two insights – scale and architecture – set the stage for the era of generative AI. Today, these models underpin nearly every frontier of AI research. Yet their emergence has also raised a deeper question: could systems like these, or others inspired by the human brain, lead us to artificial general intelligence (AGI) – machines that learn and reason across domains as flexibly as we do?

There are now two distinct paths of research for AGI. The first is LLMs – trained on massive amounts of text through self-supervised learning, they display strikingly broad competence in several key areas: reasoning, coding, translation and creative writing. The suggestion here – and a giant leap from lecture hall discussions about ANNs – is that generality can emerge from scale and architecture.

Yet the intelligence of LLMs remains disembodied. They lack grounding in the physical world, persistent memory, and self-directed goals. And this is one of the central philosophical arguments that hampers the legitimacy of this path for AGI. Our ability to learn is arguably grounded in experience, our ability to perceive the world we live in and actively learn from it. If AGI ever arises from this lineage, it may not be through language models alone, but through systems that combine their linguistic fluency with perception, embodiment, and continual learning.

The opposite path

If LLMs represent the abstraction of intelligence, whole brain emulation (WBE) is its reconstruction. The concept – articulated most clearly by Anders Sandberg and Nick Bostrom in their 2008 paper Whole Brain Emulation: A Roadmap – envisions creating a one-to-one computational model of the human brain. The paper describes WBE as “the logical endpoint of computational neuroscience’s attempts to accurately model neurons and brain systems.” The process, in theory, would involve three stages: scanning the brain at nanometre resolution, converting its structure into a neural simulation, and running that model on a powerful computer.

If successful, the result wouldn’t merely be artificial intelligence – it would be a continuation of a person, perhaps with all their memories, preferences, and identity intact. WBE, in this sense, aims not to imitate the brain but to instantiate it.

Running from 2013 to 2023, a large-scale European research initiative called the Human Brain Project (HBP) aimed to further our understanding of the brain through computational neuroscience. AI was not part of the initial proposal for the project, but early successes with neural net deep learning inarguably contributed to its inclusion.

A year before the project started, in what is often called the ‘Big Bang’ of AI, the development of an image recognition neural network called AlexNet rewrote the book on deep learning. Leveraging a large image dataset and the parallel processing power of GPUs was what enabled researchers at the University of Toronto to train AlexNet to identify objects from images.

As the 10-year evaluation report for the HBP concludes, researchers realised that “deep learning methods in artificial neural networks could be systematically developed, but they often involve elements that don’t mirror biological processes. In the last phase, HBP researchers worked towards bridging this gap.” It is this, the mirroring of biological processes, which the patternist philosophy wrestles with. It is the idea that things such as consciousness and identity are ‘substrate independent,’ and are held in patterns that could successfully be emulated by a computer.

As Sandberg and Bostrom noted, “if electrophysiological models are enough, full human brain emulations should be possible before mid-century.” Whether realistic or not, this remains one of the few truly bottom-up approaches to AGI – one that attempts to build not a model of the mind, but a mind itself.

It is perhaps no surprise that LLMs have taken off when WBE still seems to be in the realm of science fiction. It is undoubtedly a harder sell for investors, no matter how alluring to selfish billionaire types the idea of being able to copy yourself may be.

The price of intelligence

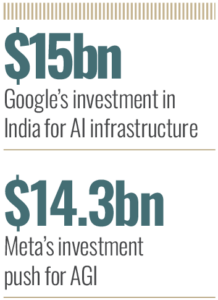

The cost of developing AI is, by any measure, extraordinary. The Wall Street Journal recently reported that Google will invest $15bn in India for AI infrastructure over the next five years. The Associated Press indicated that Meta has struck a deal with AI company Scale and will invest $14.3bn to satisfy CEO Mark Zuckerberg’s “increasing focus on the abstract idea of ‘superintelligence’” – in other words, a direct pivot towards AGI.

These are huge numbers, especially considering that the EU awarded Henry Markram, co-director of the HBP, just €1bn to run his 10-year mission to build a working model of the human brain. Beyond corporate announcements, research institute Epoch AI reports that “spending on training large-scale ML (machine learning) models is growing at a rate of 2.4x per year” and research by market data platform PitchBook shows that in 2024 investment in generative AI leapt up 92 percent year-on-year, to $56bn.
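To get a feel for what those growth figures imply, here is a minimal back-of-the-envelope sketch. It assumes, purely for illustration, that the 2.4x annual rate holds constant for five years (the source makes no such forecast), and the function names and the baseline of 1.0 are hypothetical. Under that assumption spending would end up roughly 80 times its starting level, while a 92 percent rise to $56bn implies a prior-year figure of about $29bn.

```python
def project_spending(base: float, factor: float, years: int) -> list[float]:
    """Return values for year 0..years under constant multiplicative growth."""
    return [base * factor**n for n in range(years + 1)]


def implied_previous(current: float, pct_increase: float) -> float:
    """Back out last year's figure from this year's figure and a percentage rise."""
    return current / (1 + pct_increase / 100)


if __name__ == "__main__":
    # Hypothetical baseline of 1.0 (arbitrary units); only the 2.4x factor
    # comes from the article, and holding it constant is an assumption.
    for year, value in enumerate(project_spending(1.0, 2.4, 5)):
        print(f"year {year}: {value:.1f}x baseline")

    # $56bn after a 92 percent year-on-year rise implies the 2023 figure.
    print(f"implied 2023 investment: ${implied_previous(56.0, 92.0):.1f}bn")
```

The point of the sketch is only that compounding dominates: a multiplier applied every year quickly dwarfs any single headline figure.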

For investors, the risk profile of AGI research is, to put it mildly, aggressive. The potential ROI depends not only on breakthroughs in model efficiency, but also on entirely new paradigms – memory architectures, neuromorphic chips and multimodal learning systems that bring context and continuity to AI.

Bridging two hemispheres

Large language models and whole brain emulation represent two very different roads towards the same destination: general intelligence. To my mind, it seems that neither one can do it alone. LLMs take a top-down path, abstracting cognition from the patterns of language and behaviour, discovering intelligence through scale and statistical structure. WBE, by contrast, is bottom-up. It seeks to replicate the biological mechanisms from which consciousness arises. One treats intelligence as an emergent property of computation; the other, as a physical process to be copied in full fidelity.

Yet these approaches may ultimately converge, as advances in neuroscience inform machine learning architectures, and synthetic models of reasoning inspire new ways to decode the living brain. The quest for AGI may thus end where both paths meet: in the unification of engineered and embodied mind.

In trying to answer what makes a mind work, we find that the pursuit of AGI is, at its heart, a form of introspection. If the patternists are right and the mind is substrate-independent, a reproducible pattern rather than a biological phenomenon, then its replication in silicon, if we ever achieve it, will profoundly alter how we view the ‘self’. That said, if the endpoint of humanity is being digitally inserted into a Teslabot to serve out eternity fetching a Diet Coke for the likes of Elon Musk, then it might be more prudent to advise restraint.

Jensen Huang, CEO of Nvidia, believes that “artificial intelligence will be the most transformative technology of the 21st century. It will affect every industry and aspect of our lives.” Of course, as the man leading the world’s foremost supplier of AI compute chips, he has a vested interest in making such statements.

Perhaps it is best, then, to temper such optimism and leave you with the late Stephen Hawking’s warning: “success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last, unless we learn how to avoid the risks.”
